Nate Silver (who else?) has written a great piece on weather prediction -- "The Weatherman is Not a Moron" (NYT) -- that covers both the proliferation of data in weather forecasting, and why the quantity of data alone isn't enough. What intrigued me though was a section at the end about how to communicate the inevitable uncertainty in forecasts:

...Unfortunately, this cautious message can be undercut by private-sector forecasters. Catering to the demands of viewers can mean intentionally running the risk of making forecasts less accurate. For many years, the Weather Channel avoided forecasting an exact 50 percent chance of rain, which might seem wishy-washy to consumers. Instead, it rounded up to 60 or down to 40. In what may be the worst-kept secret in the business, numerous commercial weather forecasts are also biased toward forecasting more precipitation than will actually occur. (In the business, this is known as the wet bias.) For years, when the Weather Channel said there was a 20 percent chance of rain, it actually rained only about 5 percent of the time.

People don’t mind when a forecaster predicts rain and it turns out to be a nice day. But if it rains when it isn’t supposed to, they curse the weatherman for ruining their picnic. “If the forecast was objective, if it has zero bias in precipitation,” Bruce Rose, a former vice president for the Weather Channel, said, “we’d probably be in trouble.”

My thought when reading this was that there are actually two different reasons why you might want to systematically adjust reported percentages (i.e., fib a bit) when trying to communicate the likelihood of bad weather.

But first, an aside on what public health folks typically talk about when they talk about communicating uncertainty: I've heard a lot (in classes, in blogs, and in Bad Science, for example) about reporting absolute risks rather than relative risks, and about avoiding other ways of communicating risks that generally mislead. What people don't usually discuss is whether the point estimates themselves should ever be adjusted; rather, we concentrate on how to best communicate whatever the actual values are.

Now, back to weather. The first reason you might want to adjust the reported probability of rain is that people are rain averse: they care more strongly about getting rained on when it wasn't predicted than vice versa. It may be perfectly reasonable for people to feel this way, and so why not cater to their desires? This is the reason described in the excerpt from Silver's article above.

Another way to describe this bias is that most people would prefer to minimize Type II error (false negatives) at the expense of having more Type I error (false positives), at least when it comes to rain. Obviously you could take this too far -- reporting rain every single day would completely eliminate Type II error, but it would also make forecasts worthless. Likewise, with big events like hurricanes the costs of Type I errors (wholesale evacuations, cancelled conventions, etc.) become much greater, so the adjustment becomes harder to justify. But generally speaking, the so-called "wet bias" of adjusting all rain prediction probabilities upwards might be a good way to increase the satisfaction of a rain-averse general public.
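To make the tradeoff concrete, here is a minimal sketch of the standard expected-cost argument. The function names and cost values are made up for illustration, and nothing here is how any real forecaster works: if a missed rain (false negative) costs more than a false alarm (false positive), the probability at which it pays to "call rain" drops below 50%, and it climbs back up as false alarms get more expensive.

```python
def rain_call_threshold(cost_false_alarm: float, cost_missed_rain: float) -> float:
    """Probability above which calling rain has the lower expected cost.

    Calling rain costs (1 - p) * cost_false_alarm on average; not calling it
    costs p * cost_missed_rain. The two are equal at the value returned here.
    """
    return cost_false_alarm / (cost_false_alarm + cost_missed_rain)


def should_call_rain(p_rain: float, cost_false_alarm: float, cost_missed_rain: float) -> bool:
    """True when forecasting rain minimizes expected cost under these costs."""
    return p_rain > rain_call_threshold(cost_false_alarm, cost_missed_rain)


if __name__ == "__main__":
    # Hypothetical picnic: getting rained on hurts 4x more than a false alarm.
    print(rain_call_threshold(cost_false_alarm=1.0, cost_missed_rain=4.0))
    # Hypothetical hurricane: a needless evacuation is very expensive.
    print(rain_call_threshold(cost_false_alarm=50.0, cost_missed_rain=4.0))
```

With the picnic costs the threshold sits well below one half, which is the rain-averse case; with the hurricane costs it sits close to one, which is why the same upward adjustment looks much worse there.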

The second reason one might want to adjust the reported probability of rain -- or some other event -- is that people are generally bad at understanding probabilities. Luckily though, people tend to be bad about estimating probabilities in surprisingly systematic ways! Kahneman's excellent (if too long) book Thinking, Fast and Slow covers this at length. The best summary of these biases that I could find through a quick Google search was from Lee Merkhofer Consulting:

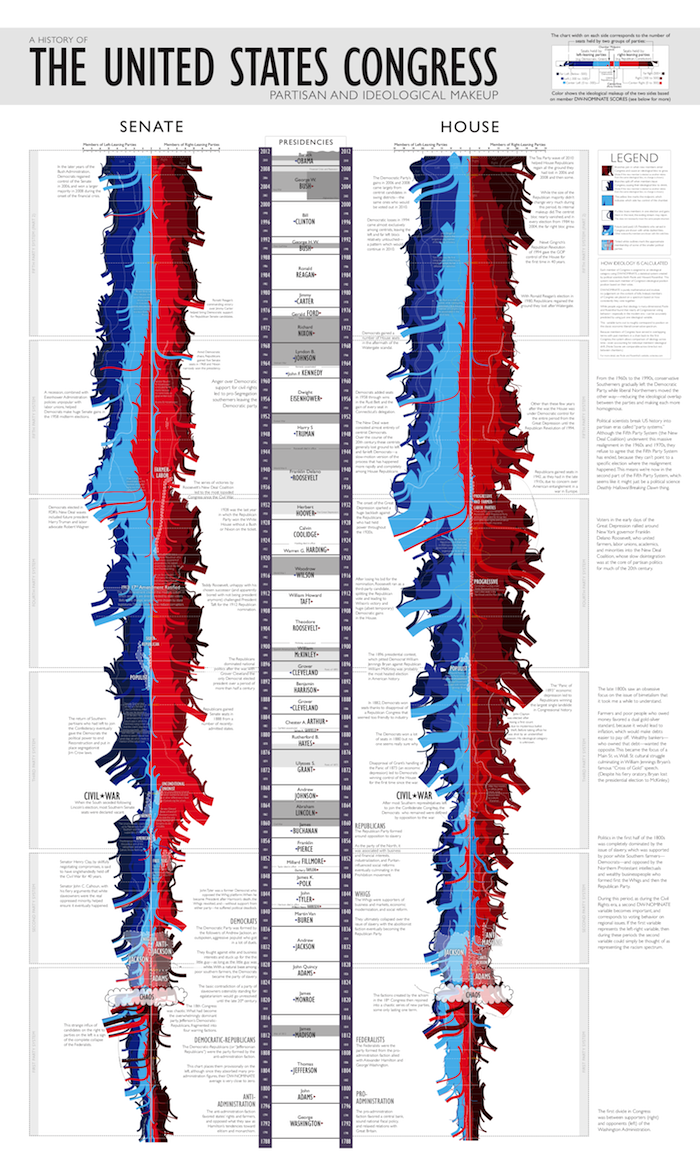

Studies show that people make systematic errors when estimating how likely uncertain events are. As shown in [the graph below], likely outcomes (above 40%) are typically estimated to be less probable than they really are. And, outcomes that are quite unlikely are typically estimated to be more probable than they are. Furthermore, people often behave as if extremely unlikely, but still possible outcomes have no chance whatsoever of occurring.

The graph from that link is a helpful if somewhat stylized visualization of the same biases:

In other words, people think that likely events (in the 30-99% range) are less likely to occur than they are in reality, that unlikely events (in the 1-30% range) are more likely to occur than they are in reality, and that extremely unlikely events (very close to 0%) won't happen at all.

My recollection is that these biases can be a bit different depending on whether the predicted event is bad (getting hit by lightning) or good (winning the lottery), and that the familiarity of the event also plays a role. Regardless, with something like weather, where most events are within the realm of lived experience and most of the probabilities lie within a reasonable range, the average bias could probably be measured pretty reliably.

So what do we do with this knowledge? Think about it this way: we want to increase the accuracy of communication, but there are two different points in the communications process where you can measure accuracy. You can care about how accurately the information is communicated from the source, or how well the information is received. If you care about the latter, and you know that people have systematic and thus predictable biases in perceiving the probability that something will happen, why not adjust the numbers you communicate so that the message -- as received by the audience -- is accurate?

Now, some made-up numbers: Let's say the real chance of rain is 60%, as predicted by the best computer models. You might adjust that up to 70% if that's the reported risk that makes people perceive a 60% objective probability (again, see the graph above). You might then adjust that percentage up to 80% to account for rain aversion/wet bias.
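Here is a rough sketch of that two-step adjustment in Python. Everything in it is an assumption chosen to reproduce the made-up numbers above: the linear perception model, the 30% crossover, the 0.75 slope, and the 10-point wet bias are all hypothetical, and the straight line ignores the "very unlikely events get rounded to zero" effect entirely.

```python
# All constants are made up for illustration; none are measured values.
CROSSOVER = 0.30    # hypothetical point where perceived == reported
COMPRESSION = 0.75  # hypothetical slope of perceived vs. reported probability
WET_BIAS = 0.10     # hypothetical upward shift to account for rain aversion


def clamp(p: float) -> float:
    """Keep a probability inside [0, 1]."""
    return min(1.0, max(0.0, p))


def perceived(reported: float) -> float:
    """Hypothetical linear model of how a reported probability is perceived."""
    return CROSSOVER + COMPRESSION * (reported - CROSSOVER)


def perception_adjusted(true_p: float) -> float:
    """Reported value that this model says will be perceived as true_p."""
    return clamp(CROSSOVER + (true_p - CROSSOVER) / COMPRESSION)


def rain_risk(true_p: float) -> float:
    """Perception-adjusted value plus the wet-bias shift."""
    return clamp(perception_adjusted(true_p) + WET_BIAS)


if __name__ == "__main__":
    p = 0.60
    print(f"model probability:   {p:.0%}")
    print(f"perception-adjusted: {perception_adjusted(p):.0%}")
    print(f"with wet bias:       {rain_risk(p):.0%}")
```

With these constants a 60% model probability becomes roughly 70% after the perception step and 80% after the wet-bias step. A real version would need a perception curve fitted to actual survey data rather than a straight line.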

Here I think it's important to distinguish between technical and popular communication channels: if you're sharing raw data about the weather or talking to a group of meteorologists or epidemiologists then you might take one approach, whereas another approach makes sense for communicating with a lay public. For folks who just tune in to the evening news to get tomorrow's weather forecast, you want the message they receive to be as close to reality as possible. If you insist on reporting the 'real' numbers, you actually draw your audience further from understanding reality than if you fudged them a bit.

The major and obvious downside to this approach is that if people know this is happening, it won't work, or they'll be mad that you lied -- even though you were only lying to better communicate the truth! One possible way of getting around this is to describe the numbers as something other than percentages: use some made-up index that sounds enough like a percentage to satisfy the layperson, while remaining open to detailed examination by those who are interested.

For instance, we all know the heat index and wind chill aren't the same as temperature, but rather represent just how hot or cold the weather actually feels. Likewise, we could report something like "Rain Risk" or "Rain Risk Index" that accounts for known biases in risk perception and rain aversion. The weatherman would report a Rain Risk of 80%, while the actual probability of rain is just 60%. This would give recipients more useful information, while also maintaining technical honesty and some level of transparency.

I care a lot more about health than about the weather, but I think predicting rain is a useful device for talking about the same issues of probability perception in health for several reasons. First off, the probabilities in rain forecasting are much more within the realm of human experience than the rare probabilities that come up so often in epidemiology. Secondly, the ethical stakes feel a bit lower when writing about lying about the weather rather than, say, suggesting physicians should systematically mislead their patients, even if the crucial and ultimate aim of the adjustment is to better inform them.

I'm not saying we should walk back all the progress we've made in terms of letting patients and physicians make decisions together, rather than the latter withholding information and paternalistically making decisions for patients based on the physician's preferences rather than the patient's. (That would be silly in part because physicians share their patients' biases.) The idea here is to come up with better measures of uncertainty -- call it adjusted risk or risk indexes or weighted probabilities or whatever -- that help us bypass humans' systematic flaws in understanding uncertainty.

In short: maybe we should lie to better tell the truth. But be honest about it.