Note: I've edited the original title of this post to tone it down a bit.

World Vision has recently come under fire for their plan to send 100,000 NFL t-shirts printed with the losing Super Bowl team to the developing world. This gifts-in-kind strategy was criticized by many bloggers -- good summaries are at More Altitude and Good Intentions are Not Enough. Saundra S. of Good Intentions also explained why she thinks there hasn't been as much reaction as you might expect in the aid blogosphere:

> So why does Jason, who did not know any better, get a barrage of criticism? Yet World Vision, with decades of experience, does not? Is it because aid workers think that the World Vision gifts-in-kind is a better program? No, that’s not what I’m hearing behind the scenes. Is it because World Vision handled their initial response to the criticism better? That’s probably a small part of it; I think Jason’s original vlog stirred up people’s ire. But it’s only a small part of the silence. Is it because we are all sick to death of talking about the problems with donated goods? That’s likely a small part of it too. I, for one, am so tired of this issue that I’d love to never have to write about it again.
>
> But in the end, the biggest reason for the silence is aid industry pressure. I’ve heard from a few aid workers that they can’t write - and some can’t even tweet – about the topic because they either work for World Vision or they work for another nonprofit that partners with World Vision. Even people that don’t work for a nonprofit are feeling pressure. One independent blogger told of receiving emails from friends that work at World Vision imploring them not to blog about the issue.

While I was one of the critical commentators on the original World Vision blog post about the NFL shirt strategy, I haven't written about it yet here, and I feel compelled by Saundra S.'s post to do so. [Disclosure: I've never worked for World Vision even in my consulting work and -- since I'm writing this -- probably never will, so my knowledge of the situation is gleaned solely from the recent controversy.]

And now World Vision has posted a long response to reader criticisms, albeit without actually linking to any of those criticisms -- bad netiquette if you ask me. Saundra S. responds to the World Vision post with this:

> Easy claims to make, but can you back them up with documentation? Especially since other non-profits of similar size and mission - Oxfam, Save the Children, American Red Cross, Plan USA - claim very little as gifts-in-kind on their financial statements. So how is it that World Vision needs even more than the quarter of a billion dollars' worth of gifts-in-kind each year to run their programs? To be believed, you will need to back up your claims with documentation including: needs assessments, a market analysis of what is available in the local markets and the impact on the market of donated goods (staff requests do not equal a market analysis), an independent evaluation of both the NFL donations (after 15 years you should have done at least one evaluation) and an independent evaluation of your entire gifts-in-kind portfolio. You should also share the math behind how World Vision determined that the NFL shirts had a Fair Market Value - on the date of donation - of approximately $20 each. And this doesn't even begin to hit on the issues with World Vision's marketing campaigns around GIK. Why keep perpetuating the Whites in Shining Armor image?

So to summarize Saundra S.'s remaining questions:

1. Can WV actually show that they rigorously assess the needs of the communities they send gifts-in-kind (GIK) to, beyond just "our staff requested them"?

2. Why does WV rely on a much larger share of GIK than other similarly sized nonprofits?

3. Has WV tried to really evaluate the results of this program? (If not, that's ridiculous after 15 years.)

4. How did WV calculate the 'fair market value' for these shirts? (This one has an impact on how honestly WV is marketing itself and its efficiency.)

Other commenters at the WV response (rgailey33 and "Bill Westerly") raise further questions:

5. Does WV know or care where the shirts come from and how their production impacts people?

6. Rather than depending, as they apparently do, on big partners like the NFL to help spread the word about what WV is doing and, yes, drive more potential donors to WV's website (not in itself a bad thing), shouldn't they be doing more to help partners like the NFL -- and the public they can reach -- realize that t-shirts aren't a solution to global poverty? After all, wouldn't it be much more productive to include the NFL in a discussion of how to reform the global clothing and merchandise industries to be less exploitative?

7. WV must have spent a lot of money shipping these things... isn't there something better they could do with all that money? And expanding on that:

Opportunity cost, opportunity cost, opportunity cost. The primary reason I'm critical of World Vision is that there are so many things they could be doing instead!

For a second, let's assume that GIK has no negative or positive effects -- let's pretend it has absolutely no impact whatsoever. (In fact, this may be a decently good approximation of reality.) Even then, WV would have to account for how much they spent on the program. How much did WV spend on staff time, on administrative costs like facilities, and on field research by their local partners, and on coordinating donations with the NFL and other corporate groups? On receiving, sorting, shipping, paying import taxes, and distributing their gifts-in-kind? If they've distributed 375,000 shirts over the last few years, and done all of the background research they describe as necessary to be sensitive to local needs... I'm sure it's an awful lot of money, likely in the millions.

Amy at World Vision is right that their response will likely dispel some criticism, but not all. But that's not because we critics are a particularly cantankerous bunch -- we just think they could be doing better. Her response shows that, in one sense at least, they are a lot better than Jason of the 1 Million Shirts fiasco, if they're spreading the shirts out and doing local research on needs -- but those things are more about minimizing potential harm than about maximizing impact. In short, World Vision's defense seems to be "hey, what we're doing isn't that bad," when really they should be saying "you know what? There are lots of things we could be doing instead of this that would have much greater impact." So in another way World Vision is much worse than Jason: they have enough experts on these things to know that this sort of program has very little chance of pulling anyone out of poverty, they know there are better things they could be doing with the same money, and they still do it.

To get to why I think that's the case, let's go back to WV's response to the GIK controversy. From Amy:

> At the same time, I’ll also let you know that, among our staff, there is a great deal of agreement with some of the criticisms that have been posted here and elsewhere in the blogosphere. In my conversations, I’ve heard overwhelming agreement that product distribution done poorly and in isolation from other development work is, in fact, bad aid. To be sure, no one at World Vision believes that a tee shirt, in and of itself, is going to improve living conditions and opportunities in developing communities. In addition, World Vision doesn’t claim that GIK work alone is sustainable. In fact, no aid tactic, in and of itself, is sustainable. But if used as a tool in good development work, GIK can facilitate good, sustainable development.

There are obviously a lot of well-intentioned and smart people at World Vision, and from this it sounds like there are differences of opinion about the value of GIK aid. One charitable way of looking at the situation is to assume that employees at WV who doubt the program's impact justify its use as a marketing tool -- but if that's the case, they should classify it as a marketing expense, not a programmatic one. I imagine the doubts run deeper, but it's hard for anyone below the most senior levels to change things much from inside the organization, because GIK is simply too ingrained in how WV works. Clusters of jobs at WV are probably devoted to tasks related to this part of their work: managing corporate partnerships, coordinating the logistics of the donations, and coordinating their distribution.

One small hope is that this controversy is giving cover to some of those internal critics, as the bad publicity associated with it may negate the positive marketing value they normally get from GIK programs. Maybe a public shaming is just what is needed?

[I really hope I get to respond to this post in 6 months or a year and say that I was wrong, that World Vision has eliminated the NFL program and greatly reduced their share of GIK programs... but I'm not holding my breath.]

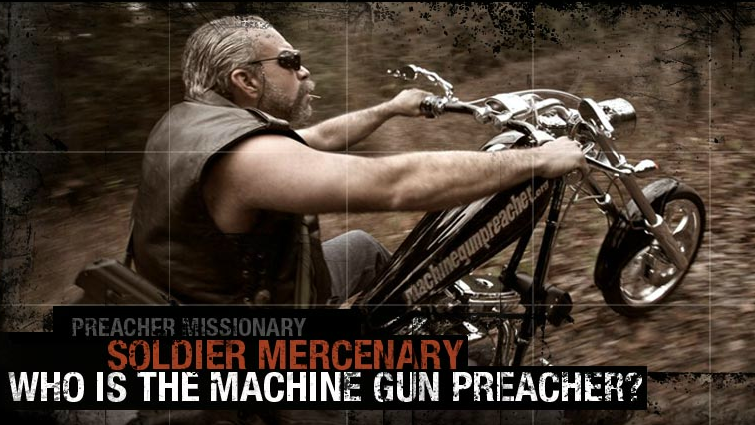

He goes by many names, Reverend Sam and the “Machine Gun Preacher” amongst them. If you haven’t heard much from Sam Childers, you will soon. To date he’s been featured in a few mainstream publications, but most of his exposure has come from forays into Christian media outlets and cross-country speaking tours of churches. In 2009 he published his memoir, Another Man’s War. But Childers is about to become much better known: his life story is being made into a movie titled Machine Gun Preacher. It hits the big screen this September, starring Gerard Butler (300) and directed by Marc Forster (Monster’s Ball, Quantum of Solace).